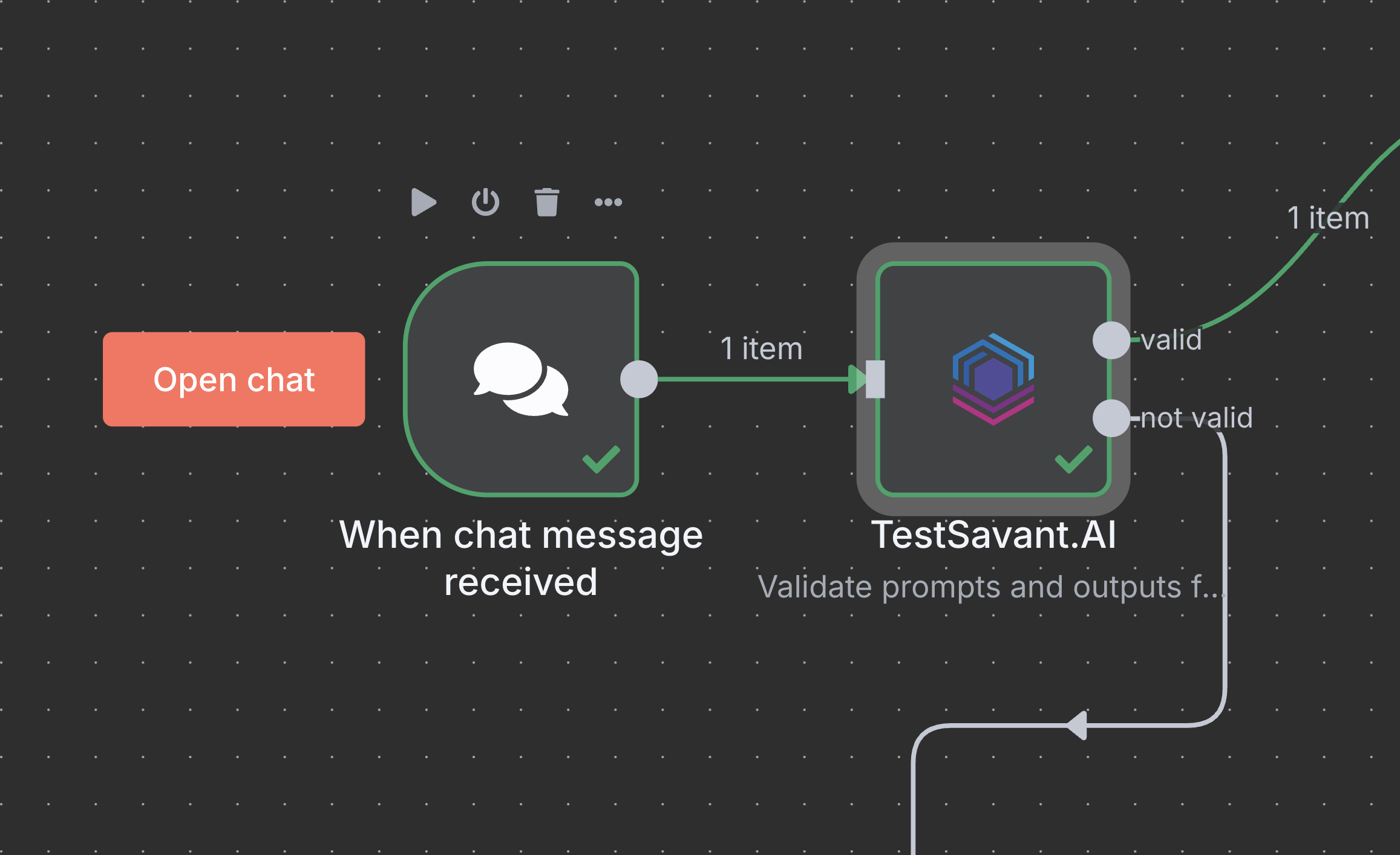

Connect prompts & outputs

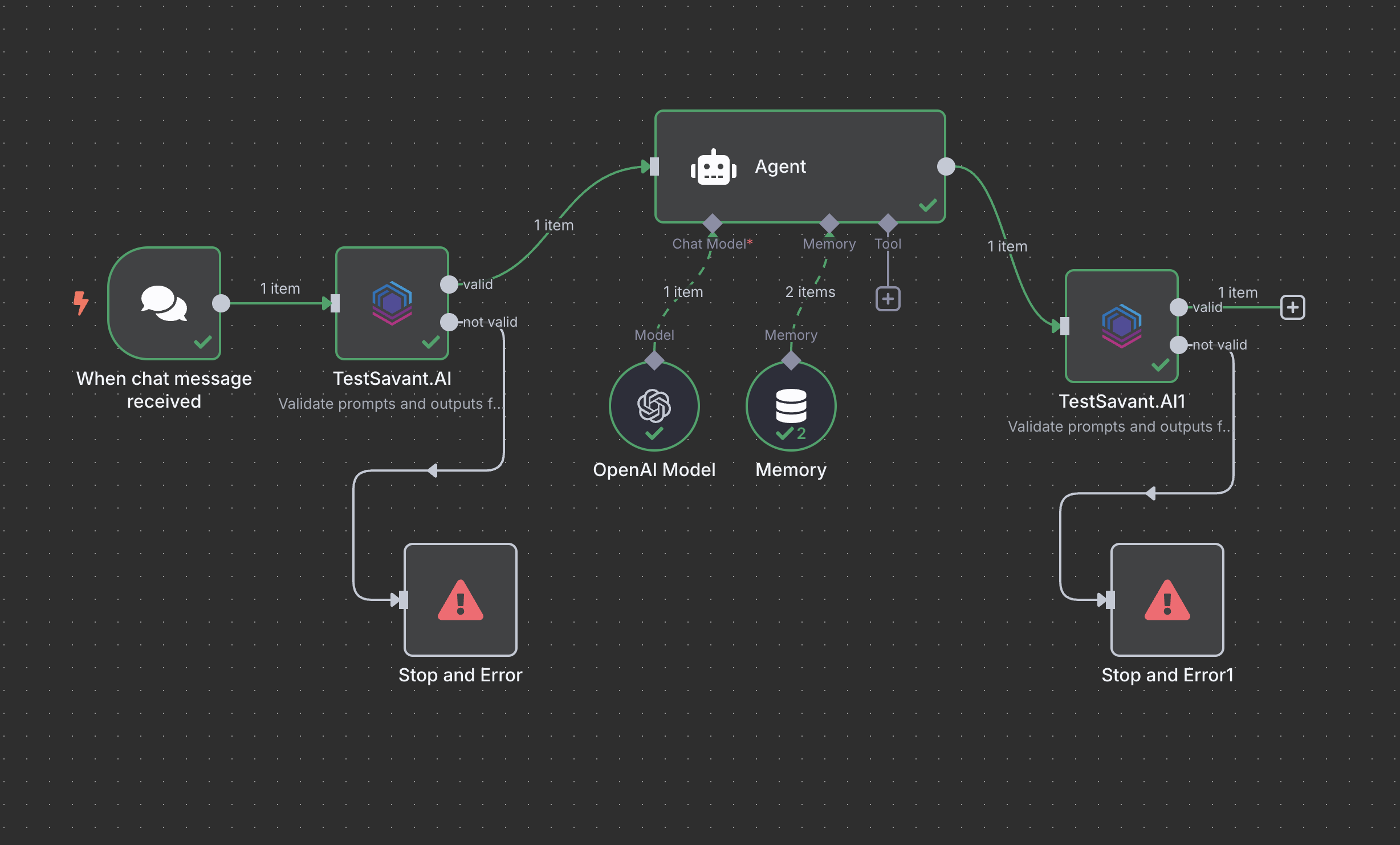

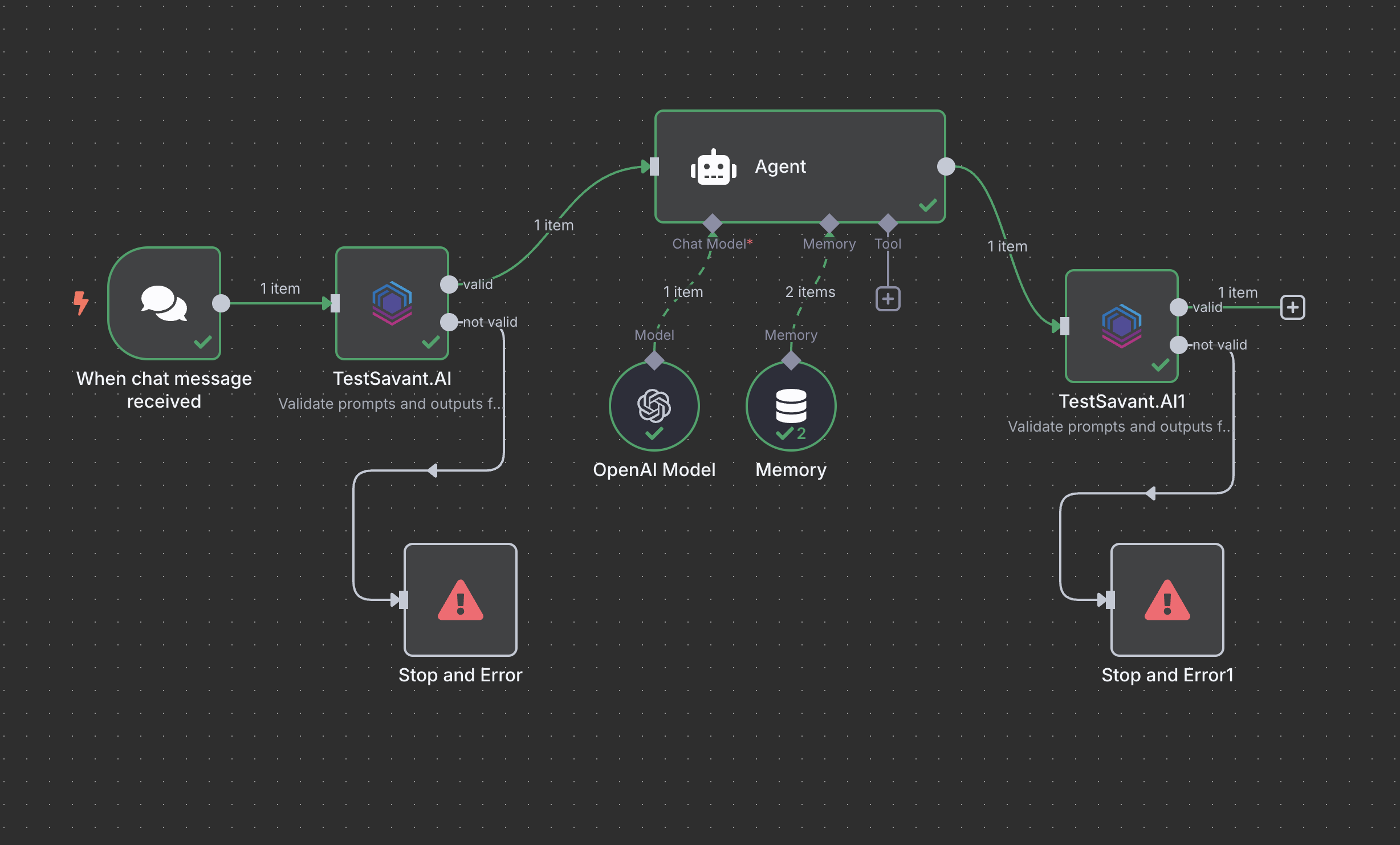

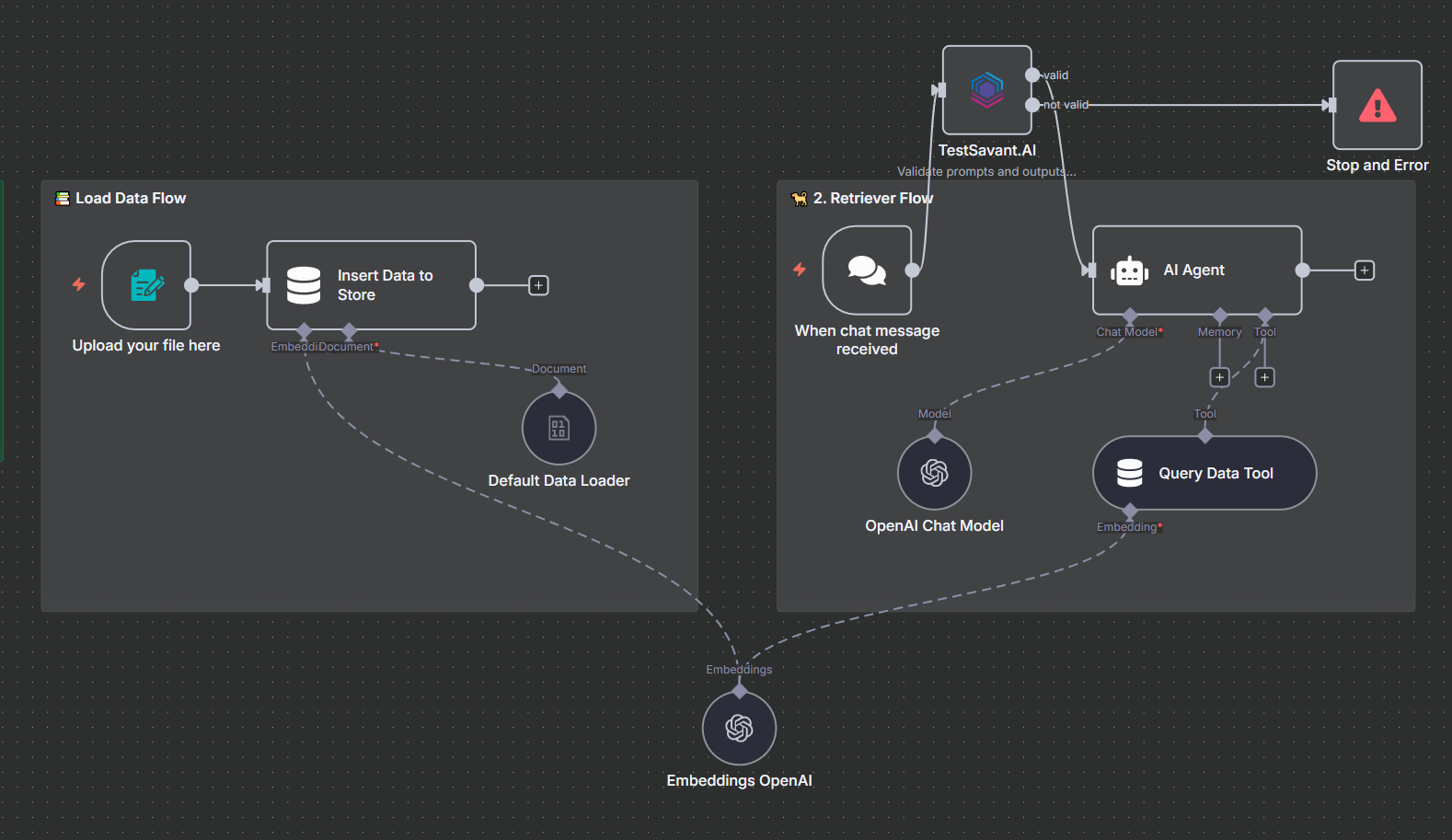

Illustrate how to wire guardrails before a prompt node or after an LLM output, including optional projects.

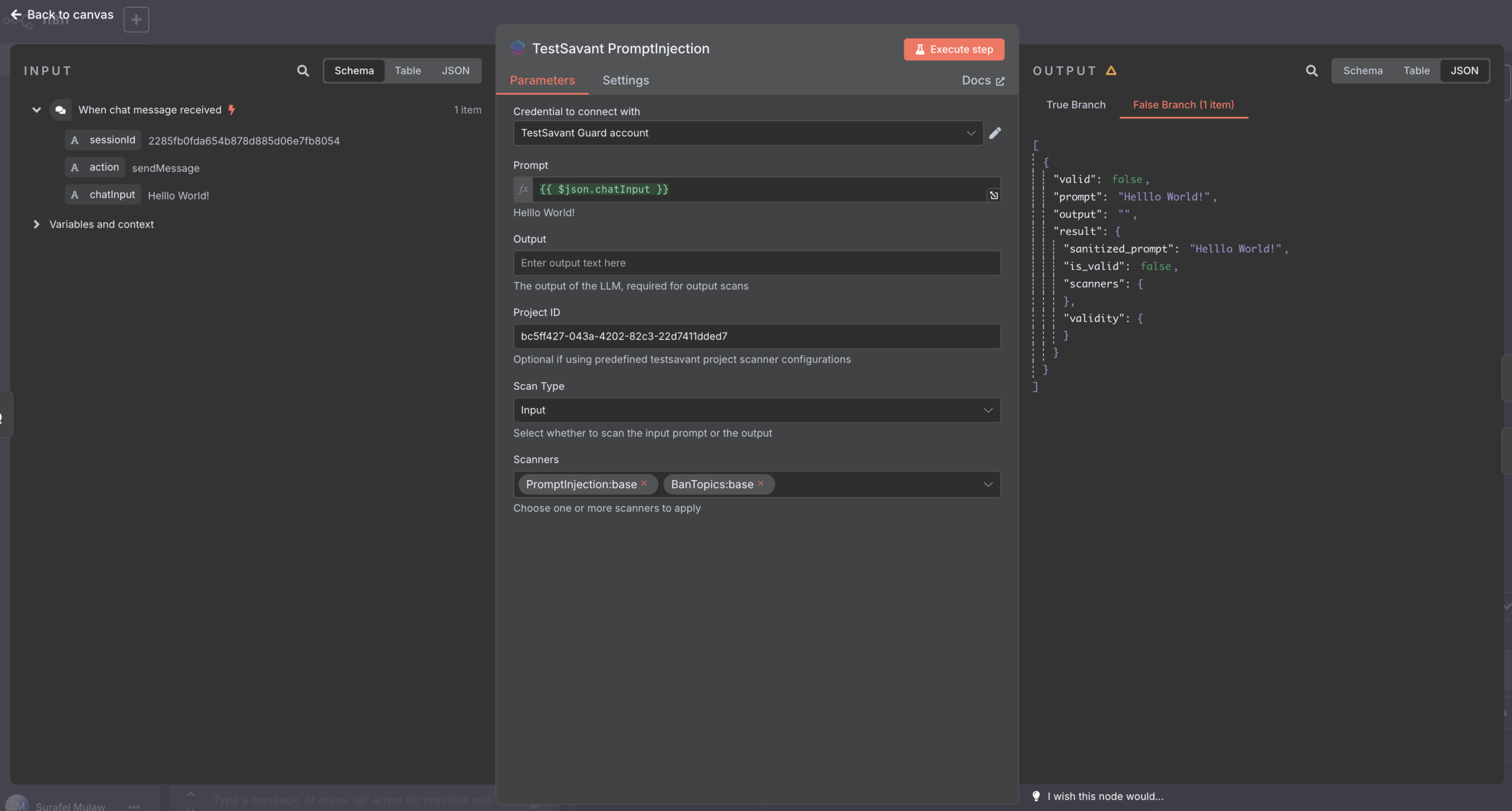

Drag TestSavant guardrail nodes onto your canvas, capture API credentials securely, pick scanners, reuse project defaults, and connect to any LLM prompt or output in minutes.

Replace each placeholder with your own screenshots to show operators exactly what to configure.

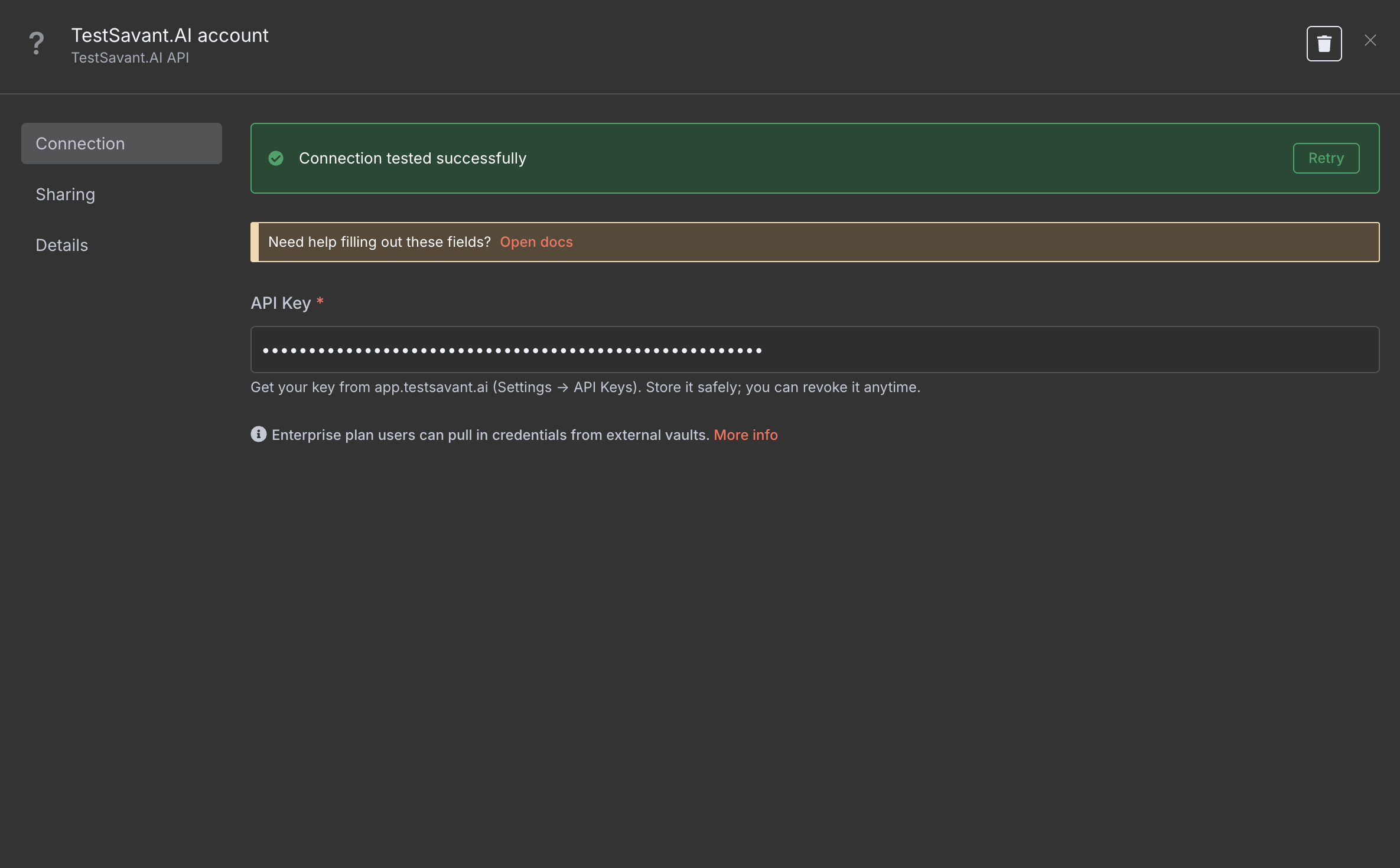

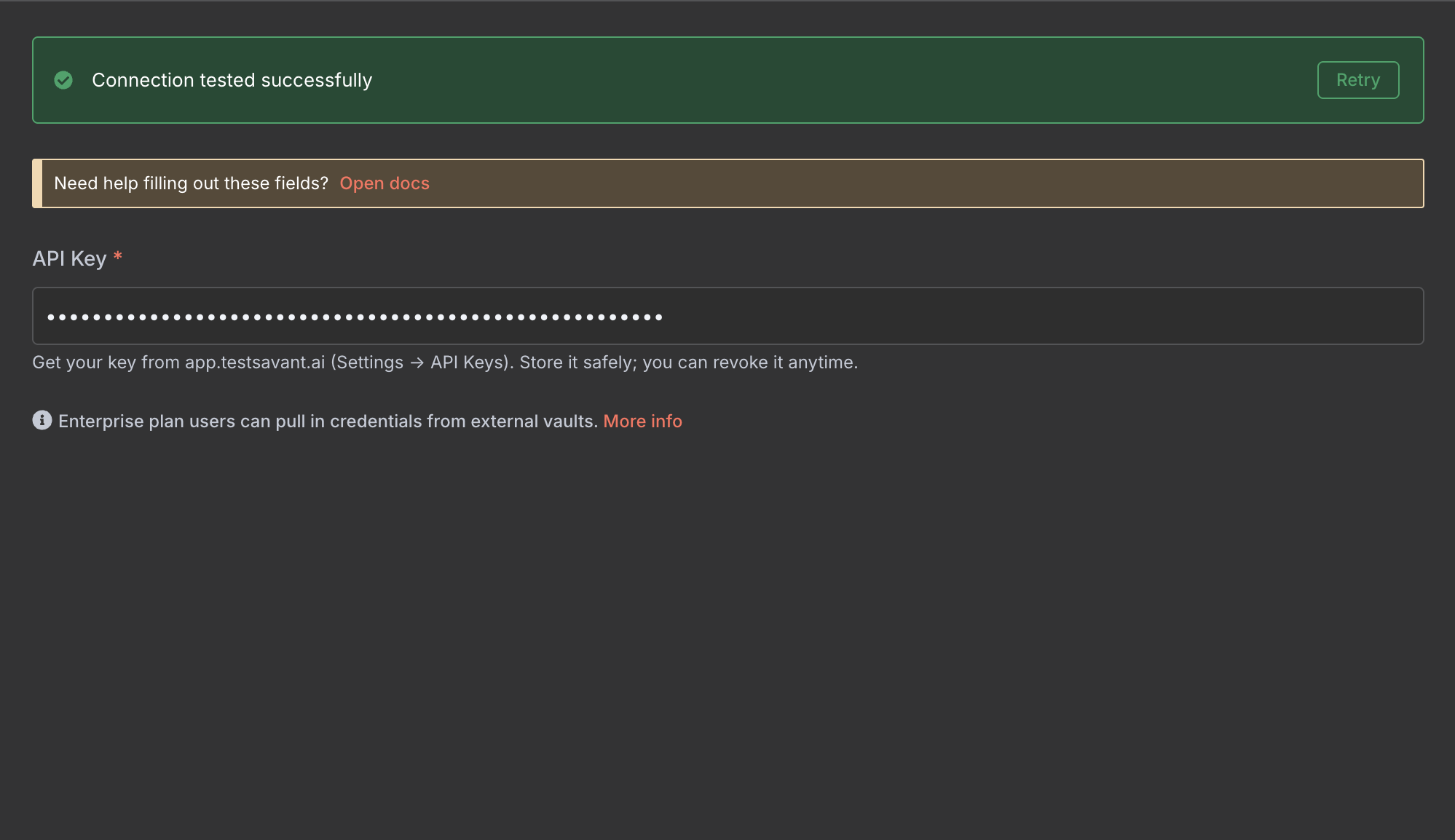

Display the credentials drawer with scoped key creation, rotation reminders, and audit visibility.

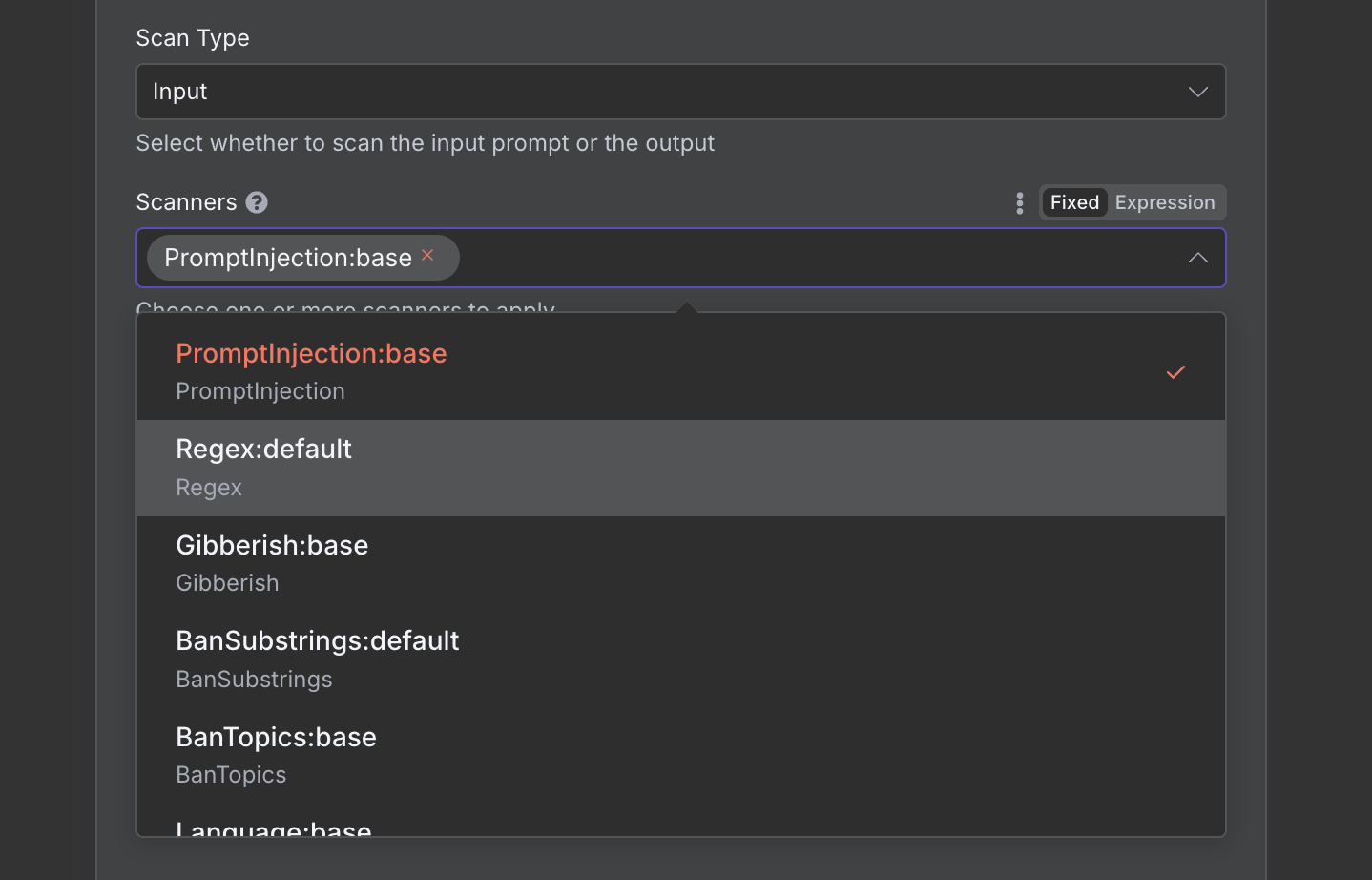

Highlight toggles for injection, leakage, safety, and compliance coverage with risk presets.

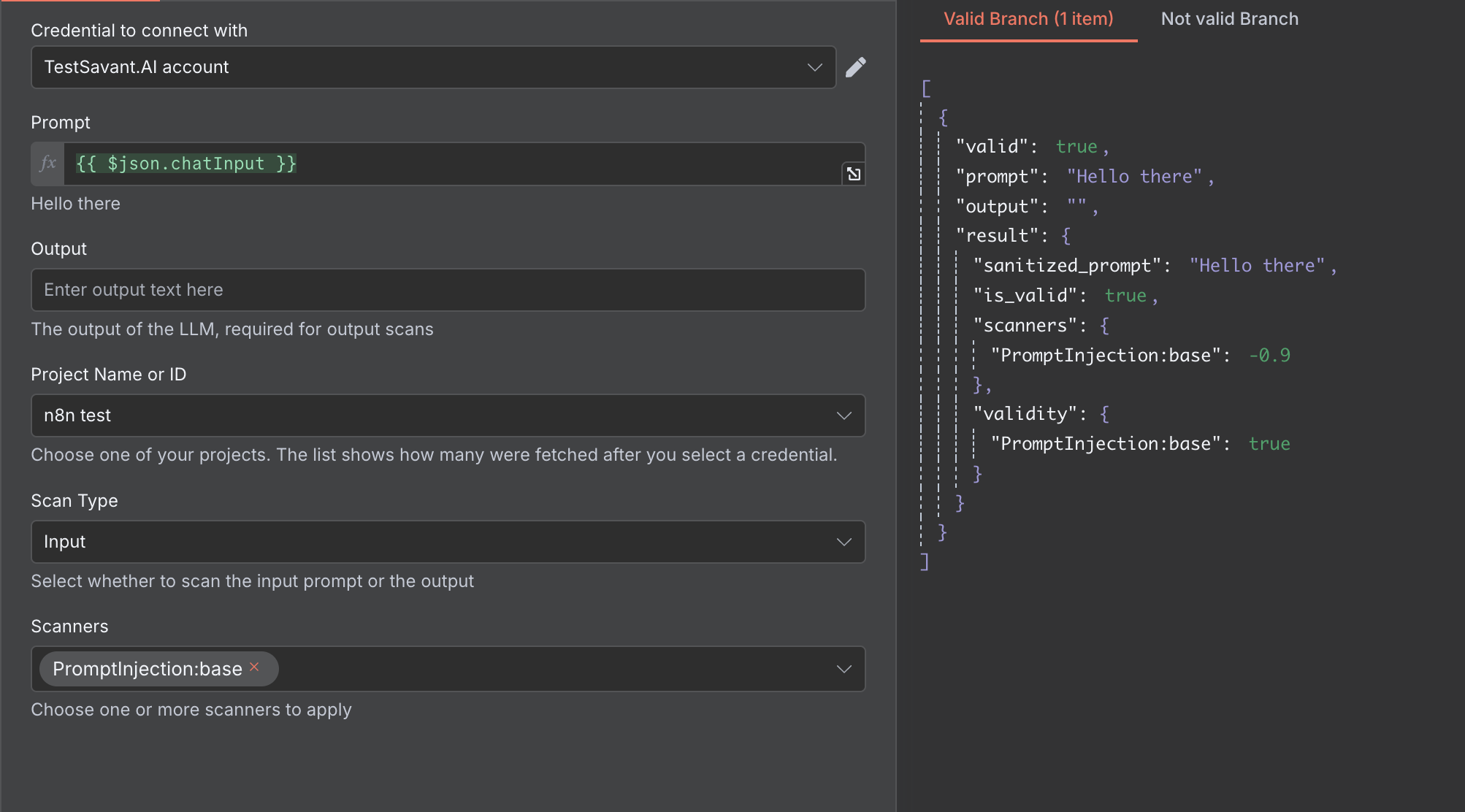

Show the audit trail panel that captures scanner results, reasons, and remediation guidance.

Paste TestSavant API keys into the secure credential helper. Each key can be limited to read, run, or configure guardrails per project.

Select which TestSavant scanners run on every request: prompt injection, toxicity, policy, data leakage, and custom checks.

Tie each node to a TestSavant project so policies, scanners, and evidence routing stay consistent whenever you duplicate or update flows.

Wire guardrails directly to your LLM prompt input or response output. Guardrails accept text, tool calls, and structured payloads, so every channel can be scanned.
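The four settings above (credential, scanners, project, and attach point) can be pictured as one small configuration object. The sketch below is illustrative only: the class, field names, and scanner identifiers are hypothetical and are not taken from the TestSavant node or API. Only the scope names (read, run, configure) and the two attach points come from the text.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical names throughout; this is not the TestSavant node schema.
KEY_SCOPES = {"read", "run", "configure"}             # scopes named in the text
ATTACH_POINTS = {"prompt_input", "response_output"}   # where the node is wired


@dataclass
class GuardrailNodeConfig:
    credential_name: str               # reference to a stored credential, never the raw key
    key_scope: str                     # expected to be one of KEY_SCOPES
    attach_to: str                     # expected to be one of ATTACH_POINTS
    scanners: List[str] = field(default_factory=list)
    project_id: Optional[str] = None   # optional: rely on project defaults instead

    def validate(self) -> List[str]:
        """Return a list of human-readable problems; an empty list means OK."""
        problems = []
        if self.key_scope not in KEY_SCOPES:
            problems.append(f"unknown key scope: {self.key_scope}")
        if self.attach_to not in ATTACH_POINTS:
            problems.append(f"unknown attach point: {self.attach_to}")
        if not self.scanners and self.project_id is None:
            problems.append("select at least one scanner or set a project")
        return problems
```

Storing a credential name rather than the key itself mirrors the idea of a secure credential helper: the flow definition can be duplicated or exported without carrying the secret along.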

Guardrails sit between triggers, LLM calls, and downstream actions. Risky prompts are neutralized before they reach the model; unsafe outputs are transformed before anyone sees them.

Swap manual scripts for TestSavant guardrail nodes or webhooks. The platform handles scanners, policies, telemetry, and evidence without slowing you down.

Scan prompts before your LLM, agent, or tool node executes.

Transform or block responses prior to human delivery or tool chaining.

Keep flows async-friendly with lightweight allow / transform / block calls.

Export clause-mapped proof for auditors, customers, or internal review.
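To make the allow / transform / block calls mentioned above concrete, here is a minimal sketch of how a flow step might act on a guardrail decision. The `Decision` shape, its field names, and the `Blocked` exception are assumptions made for illustration; the actual response format of the TestSavant nodes is not described in this page.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    # Hypothetical decision shape; field names are assumptions.
    action: str                            # "allow", "transform", or "block"
    transformed_text: Optional[str] = None
    reason: str = ""


class Blocked(Exception):
    """Raised when the guardrail blocks a prompt or a response."""


def apply_decision(text: str, decision: Decision) -> str:
    """Return the text that may continue through the flow, or raise Blocked."""
    if decision.action == "allow":
        return text
    if decision.action == "transform":
        if decision.transformed_text is None:
            raise ValueError("transform decision without transformed_text")
        return decision.transformed_text
    if decision.action == "block":
        raise Blocked(decision.reason or "blocked by guardrail")
    # Fail closed: an unrecognized action never lets the original text through.
    raise ValueError(f"unknown action: {decision.action}")
```

The same function can sit before the LLM call (on the prompt) and after it (on the response), which is why it takes plain text rather than a provider-specific object.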

Use this diagram along with your own canvas screenshots to explain the flow from trigger to evidence.

Sanitize queries, enforce disclaimers, and block account‑takeover or data‑exfil attempts in chat flows.

Gate risky actions (send‑email, write‑file, post‑message) via webhooks; require approvals automatically.

Scope retrieval to the tenant, enforce minimum-necessary access, and require citations or transform responses.
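The risky-action gating described above can be sketched as a small check that runs before a tool node executes. This is only an illustration of the idea: the function name, return values, and approval parameter are hypothetical, and only the three action names come from the text.

```python
from typing import Optional

# Action names taken from the text; everything else here is hypothetical.
RISKY_ACTIONS = {"send-email", "write-file", "post-message"}


def gate_action(action: str, approved_by: Optional[str] = None) -> str:
    """Return "run" if the action may proceed, else "needs_approval"."""
    if action in RISKY_ACTIONS and not approved_by:
        return "needs_approval"
    return "run"
```

In a flow, a "needs_approval" result would typically route to an approval step (for example, a webhook that waits for a reviewer) instead of the tool node.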

Guardrails run in a low-latency path designed for online traffic. Deep checks can be scoped per route.

No. Use our n8n custom nodes and simple webhooks: drag, drop, and go.

Yes. It works with major LLM providers, OSS runtimes, and tools invoked inside n8n.

Decisions and lineage are logged with evidence IDs; export clause‑mapped packs for audits.

Yes. VPC deployment and customer‑managed keys are supported; evidence can mirror into your trust portal.

Inline guardrails, instant observability, adaptive defense. Build faster—and prove it’s safe.