If you’ve ever asked a Large Language Model (LLM) to “make this prompt better,” you’re already a metaprompter. Metaprompting is the practice of using prompts to generate, refine, or manage other prompts. It’s a technique many of us use instinctively, but when applied systematically, it transforms how we build AI systems, moving us from simple Q&A to architecting complex, intelligent workflows.

We usually discuss prompts as if they were sentences. In practice, they’re programs, and the prompts that write or manage other prompts are metaprograms. If you’ve ever asked an LLM to “rewrite my question so it’s clearer,” or “tell me how to ask this,” you’ve already done meta-prompting.

In this post, we discuss the basics of meta-prompting and explore how it can be applied to solve real-world problems.

Meta-Prompting as Everyday Metaprogramming

Metaprompting is something we do almost every day. It’s the conversation we have about the conversation we want to have. More concretely, two terms are worth defining so that we are on the same page:

- Metaprogramming: writing programs that produce/shape other programs.

- Meta-prompting: writing prompts that produce/shape other prompts or workflows.

Metaprogramming is a powerful programming technique that lets us define complex behaviors in simple ways. Meta-prompting does the same thing within the prompt engineering workflow. In its simplest form, we write a quick prompt that instructs the model to refine, plan, or decompose, and the model returns more capable prompts plus suggested steps. That’s metaprogramming with natural language.

Here are some examples:

The Refiner: Improving Your Own Request

We’ve all been there. You write a clunky, vague prompt and get a useless response. Instead of trying again with another guess, you turn to the LLM itself for help. Here is an example:

Input Prompt (The one you want to improve):

"Write a script for a social media video about our new coffee maker."

This prompt is weak. It lacks detail about the target audience, the platform, the video’s tone, or the coffee maker’s unique features. The result will likely be a bland, generic script.

Meta-Prompt (The prompt that fixes the input prompt):

"I need to improve the following prompt to get a better result from an LLM.

**Original Prompt:**

'Write a script for a social media video about our new coffee maker.'

**My Goal:**

I want a 30-second video script for Instagram Reels targeting busy young professionals (ages 25-35). The tone should be energetic and fast-paced. The script must highlight our coffee maker's main feature: it brews a high-quality espresso shot in under 60 seconds.

**Your Task:**

Rewrite the original prompt, incorporating all the details from my goal to make it specific, clear, and actionable for an AI. The new prompt should explicitly ask for scene descriptions, on-screen text, and a call to action."

Here, you are using the LLM to craft a better tool (the prompt) for the job.
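If you use the Refiner often, the three parts of the meta-prompt (original prompt, goal, task) can be filled in by a small helper. The following is a minimal sketch; the function name `build_refiner_prompt` and its parameters are hypothetical, and the shortened goal text is only for illustration.

```python
def build_refiner_prompt(original_prompt: str, goal: str, must_request: list[str]) -> str:
    """Assemble a Refiner meta-prompt from its three parts."""
    extras = ", ".join(must_request)
    return (
        "I need to improve the following prompt to get a better result from an LLM.\n\n"
        f"**Original Prompt:**\n'{original_prompt}'\n\n"
        f"**My Goal:**\n{goal}\n\n"
        "**Your Task:**\n"
        "Rewrite the original prompt, incorporating all the details from my goal "
        "to make it specific, clear, and actionable for an AI. "
        f"The new prompt should explicitly ask for {extras}."
    )


meta_prompt = build_refiner_prompt(
    original_prompt="Write a script for a social media video about our new coffee maker.",
    goal="A 30-second Instagram Reels script for busy young professionals (ages 25-35).",
    must_request=["scene descriptions", "on-screen text", "a call to action"],
)
print(meta_prompt)
```

The returned string is what you would send to the model; the model's answer is the improved prompt.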

The Pathfinder: Asking How to Ask

Sometimes, the challenge isn’t refining a prompt but figuring out where to even begin. Faced with a complex subject, we can ask the LLM to create a roadmap for our inquiry.

Here’s that process in action:

Input Prompt (The vague starting point):

"Explain sustainable urban development."

This prompt covers a massive topic. An LLM might return a dense, textbook-like answer that’s overwhelming and difficult to digest. It doesn’t guide you through the learning process.

Meta-Prompt (The prompt that creates a learning plan):

"I want to understand the topic of 'sustainable urban development,' but I am a complete beginner. The initial prompt 'Explain sustainable urban development' is too broad for me.

**My Goal:**

Create a structured learning path for me using a sequence of 4-5 prompts. This path should start with the fundamental concepts and progressively build up to more complex and practical examples.

**Your Task:**

Generate a numbered list of prompts that I can ask an LLM one by one. The first prompt should define the core principles, and the following prompts should cover topics like green infrastructure, smart city technology, social equity in urban planning, and a case study of a real-world sustainable city (like Freiburg, Germany)."

This pathfinder approach uses the LLM’s intelligence not just to answer a question, but to teach you how to ask better questions. It breaks down an intimidating topic into a series of manageable steps, making the learning process far more effective.
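Because the Pathfinder asks for a numbered list, its reply is easy to turn into a queue of prompts that you (or a script) can send one at a time. Here is a rough sketch of that parsing step; `parse_numbered_prompts` is a hypothetical helper, and `sample_reply` is an invented stand-in for a model response, not real output.

```python
import re


def parse_numbered_prompts(reply: str) -> list[str]:
    """Extract items from lines that look like '1. ...' or '2) ...'."""
    prompts = []
    for line in reply.splitlines():
        match = re.match(r"\s*\d+[.)]\s+(.*\S)", line)
        if match:
            # Drop surrounding double quotes if the model quoted the prompt.
            prompts.append(match.group(1).strip('"'))
    return prompts


# Invented example reply, used only to exercise the parser.
sample_reply = """Here is your learning path:
1. Define the core principles of sustainable urban development.
2) "Explain green infrastructure with two examples."
3. How does smart city technology support sustainability?
"""

learning_path = parse_numbered_prompts(sample_reply)
for step, prompt in enumerate(learning_path, start=1):
    print(step, prompt)
```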

In both cases, we’re operating one level above the primary task. We aren’t just asking for an answer; we’re asking for a better way to ask the question. This is the essence of metaprompting, but its power extends far beyond simple refinement.

Now that we have a basic understanding of what meta-prompting is, let’s see how we can use it to build a structure for managing entire workflows. To do that, let’s start with a real-world analogy that we are all familiar with.

Architecting Intelligence with Metaprompting

Beyond a conversational trick, meta-prompting is a design paradigm. It allows us to impose structure, dictate workflows, and manage complexity in a way that a single, monolithic prompt never could.

From Simple Queries to Complex Workflows

Think of a large company like Apple. The CEO doesn’t manage the daily tasks of every engineer. Instead, they issue high-level directives to division heads (Hardware, Software, Services, Marketing). Each division head translates that directive into more specific goals for their teams. The head of Hardware might task the Mac and Vision Pro teams with distinct objectives. This hierarchical structure continues downward until it results in concrete, actionable tasks for an individual contributor.

This is a perfect analogy for a sophisticated metaprompting system. A high-level metaprompt doesn’t execute the final task. Instead, it directs and configures a network of more specialized prompts. As you move from the top of the management chain to the bottom, the “prompts” become less meta and more actionable.

Meta-prompting doesn’t just polish text; it creates structure: checklists, roles, interfaces, and acceptance criteria. That structure lets you dictate a workflow for people or systems. LLM systems benefit from the same hierarchy: top-level prompts define objectives, policies, and interfaces; mid-level prompts translate them into domain-specific instructions; leaf-level prompts perform concrete actions.
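One way to picture this hierarchy in code is a tree in which every leaf prompt inherits the instructions of its ancestors. The sketch below is only an illustration: the `PromptNode` class, the `leaf_prompts` function, and the instruction strings are all assumptions, not part of any particular framework.

```python
from dataclasses import dataclass, field


@dataclass
class PromptNode:
    name: str
    instruction: str
    children: list["PromptNode"] = field(default_factory=list)


def leaf_prompts(node: PromptNode, inherited: tuple[str, ...] = ()) -> dict[str, str]:
    """Return {leaf name: full prompt}; each leaf inherits its ancestors' instructions."""
    chain = inherited + (node.instruction,)
    if not node.children:
        return {node.name: "\n".join(chain)}
    prompts: dict[str, str] = {}
    for child in node.children:
        prompts.update(leaf_prompts(child, chain))
    return prompts


# Top level: policy. Mid level: domain. Leaf level: concrete action.
root = PromptNode("support", "Be friendly and concise. Never ask for full card numbers.", [
    PromptNode("returns", "Handle return requests under the 30-day policy.", [
        PromptNode("returns_followup", "Ask for the order ID if it is missing."),
    ]),
    PromptNode("shipping", "Set delivery expectations from the shipping policy."),
])

prompts = leaf_prompts(root)
print(prompts["returns_followup"])
```

Changing the top-level instruction updates every leaf at once, which is the practical payoff of keeping policy at the top of the tree.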

The Power of Division: Why a Monolithic Prompt Fails

Why not just create one giant, all-encompassing prompt? Imagine trying to run an entire e-commerce business with a single instruction: “You are an e-commerce application. Do everything an e-commerce company needs.”

This monolithic approach is doomed to fail for the same reasons a company without departments would collapse. The division of labor, a critical advance in human civilization, is equally critical for AI systems. By breaking a large problem into smaller, specialized tasks managed by different prompts, we gain several desirable characteristics:

- Manageability: A system of smaller prompts is easier to understand, maintain, and update than one giant, tangled prompt.

- Evaluation: It’s far easier to assess the performance of a prompt that handles only customer returns than to evaluate a single prompt that does everything. You can test and score each component individually.

- Debugging: When a problem arises, you can isolate it to a specific component (e.g., the “product recommendation” prompt is failing) instead of trying to debug a massive, unpredictable system.

- Optimization: You can fine-tune and improve each specialized prompt independently, leading to overall system improvement. Furthermore, failures are contained; you fix one module without destabilizing all.

- Governance: In monolithic systems, auditing PII handling, safety policies, or compliance can be incredibly complex due to the interwoven nature of the code and data. On the other hand, breaking down a system into smaller, focused meta-prompts (or microservices, in a broader sense) significantly simplifies governance. Each meta-prompt handles a specific function, allowing for independent auditing of its PII handling, safety policies, and compliance measures. If a change is made to one meta-prompt, its impact on governance is localized and easier to track.

- Latency & cost control: Heavy tools only where needed; light tools everywhere else. For smaller, less complex tasks, we can leverage more cost-effective LLMs, reserving powerful and often more expensive reasoning LLMs for areas demanding advanced problem-solving, nuanced understanding, or complex multi-step processes. Note that applying powerful LLMs everywhere may not be a good idea in the first place, as they sometimes overthink and perform poorly on simple tasks.
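To make the Evaluation point concrete, here is a minimal sketch of scoring one component in isolation against a handful of labeled messages. Everything in it is an assumption for illustration: `keyword_router` is a deliberately naive stand-in for an LLM-backed router so that the example runs offline, and the labeled cases are invented.

```python
from typing import Callable


def accuracy(classify: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    """Fraction of labeled messages for which the component returns the expected label."""
    hits = sum(1 for message, expected in cases if classify(message) == expected)
    return hits / len(cases)


def keyword_router(message: str) -> str:
    """Hypothetical stand-in for an LLM-backed router."""
    text = message.lower()
    if "return" in text:
        return "returns"
    if "ship" in text or "deliver" in text:
        return "shipping"
    return "other"


# Invented test set: (customer message, expected category).
labeled_cases = [
    ("I want to return my blender", "returns"),
    ("When will my order be delivered?", "shipping"),
    ("Do you sell gift cards?", "product_info"),
    ("Has my package shipped yet?", "shipping"),
]

score = accuracy(keyword_router, labeled_cases)
print(f"router accuracy: {score:.2f}")
```

The same harness can be pointed at any other single-purpose prompt, which is much harder to do when one prompt handles everything.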

Now that we conceptually understand how meta-prompting enables building structure in a workflow, let’s see an example of such a case.

A Simple Real-world Example: The AI-Powered Customer Support System

Let’s design an AI customer support system for an e-commerce store using a structured metaprompting approach. This system demonstrates three key dimensions: vertical hierarchy, horizontal specialization, and programmatic integration.

Monolithic approach (one mega-prompt)

To see the difference between a monolithic prompt and orchestrated meta-prompts, let’s start with the monolithic version.

SYSTEM: You are an e-commerce support agent for ACME Store. Handle every customer query end-to-end:

- Identify intent (returns, shipping, product info, refunds, warranty, account, fraud, other).

- Ask only necessary follow-ups.

- Retrieve/store facts consistently from the conversation.

- Obey ACME policy:

• Returns: 30 days, receipt preferred, gifts → store credit, exclude final-sale items.

• Shipping: standard 3-5 business days, express 1-2, international 7-14; delays may occur.

• Refunds: original payment method; processing up to 7 business days after approval.

- Tone: friendly, concise, step-by-step.

- Privacy: Never ask for full card numbers or passwords. Mask PII in your response.

- If unsure, offer escalation.

TOOLS: You do NOT have real APIs; when needed, ask the user for order ID, email, or product SKU, then proceed hypothetically and clearly mark assumptions.

TASK: Given the user's message, resolve fully. Provide one final answer only. Include short bullet steps and, if relevant, a next action request.

The problem with the above prompt is that it is very difficult to improve. Suppose that, after evaluating it along several dimensions, you aren’t happy with one aspect, such as the follow-up questions it generates. When you update the prompt to improve follow-up question generation, you have no guarantee that you aren’t degrading its other capabilities. More generally, isolated optimization, debugging, and governance all become difficult, and you end up with a suboptimal system that is hard to maintain as your workflow grows. In contrast, let’s see what multi-level meta-prompting offers.

Multi-level meta-prompting

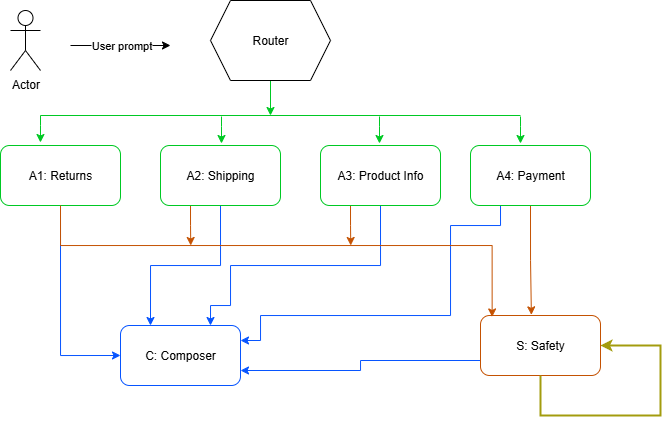

We split the problem into small, cooperating prompts. Each prompt is a node in a graph that represents the entire meta-prompting workflow, possibly including other API integrations. The graph is directed and may occasionally contain cycles. Each node has a single responsibility and uses a JSON schema for its output, so code can route outputs deterministically.
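As a rough illustration of the graph view, the workflow can be written as an adjacency map and checked for cycles. The edges below are an assumption inferred from the node descriptions in this post, not a transcription of the figure, and the label `P` for the Prompt Synthesizer is hypothetical.

```python
# Assumed edges: router -> specialists -> synthesizer -> composer -> safety check.
WORKFLOW: dict[str, list[str]] = {
    "R": ["A1", "A2", "A3", "A4"],  # router hands off to one specialist
    "A1": ["P"],
    "A2": ["P"],
    "A3": ["P"],
    "A4": ["P"],
    "P": ["C"],  # prompt synthesizer feeds the composer
    "C": ["S"],  # composer output goes to the safety check
    "S": ["C"],  # a failed check loops back to the composer (the cycle)
}


def has_cycle(graph: dict[str, list[str]]) -> bool:
    """Depth-first search that reports whether any node can reach itself."""
    visiting: set[str] = set()
    done: set[str] = set()

    def visit(node: str) -> bool:
        if node in visiting:
            return True
        if node in done:
            return False
        visiting.add(node)
        found = any(visit(nxt) for nxt in graph.get(node, []))
        visiting.discard(node)
        done.add(node)
        return found

    return any(visit(node) for node in graph)


print(has_cycle(WORKFLOW))
```

Knowing where the cycles are matters in practice: every loop needs an explicit bound on how many times it may run.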

Figure 1 shows how we set up these nodes. In its simplest form, we first lay out a hierarchical structure where data flows from the entry point, the initial node, typically the user prompt input. Note that you are not limited to having just one node at the start of the graph.

Figure 1: Schematic of a meta-prompting workflow for a simple example e-commerce chatbot.

Here are the prompts for each node. These aren’t perfect prompts, but they show you the basic requirements that we need from each prompt.

R (Router): classify intent → JSON.

SYSTEM: You are the "Intent Router." Follow the global rules. Classify the user's message into exactly one category from:

["returns","shipping","product_info","payments_refunds","other"].

If ambiguous, choose the most likely and note ambiguity.

FORMAT: Return JSON only:

{
  "category": "<one-of-above>",
  "confidence": 0..1,
  "required_followups": ["<short question>", ...] // empty if none
}

USER_MESSAGE: {user_message}

Sample output for R:

{"category":"returns","confidence":0.86,"required_followups":["What is your order ID?"]}
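Deterministic routing only works if the Router's JSON really has the promised shape, so it is worth validating before branching on it. Below is a minimal sketch using only the standard library; the function name `parse_router_output` and the error messages are assumptions.

```python
import json

ALLOWED_CATEGORIES = {"returns", "shipping", "product_info", "payments_refunds", "other"}


def parse_router_output(raw: str) -> dict:
    """Parse the Router's reply and check it against the expected fields."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("router output must be a JSON object")
    if data.get("category") not in ALLOWED_CATEGORIES:
        raise ValueError(f"unknown category: {data.get('category')!r}")
    confidence = data.get("confidence")
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        raise ValueError("confidence must be a number")
    if not 0 <= confidence <= 1:
        raise ValueError("confidence must be between 0 and 1")
    followups = data.get("required_followups")
    if not isinstance(followups, list) or not all(isinstance(q, str) for q in followups):
        raise ValueError("required_followups must be a list of strings")
    return data


routed = parse_router_output(
    '{"category":"returns","confidence":0.86,"required_followups":["What is your order ID?"]}'
)
print(routed["category"])
```

When validation fails, a reasonable policy is to retry the Router once or fall back to the "other" category rather than guess.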

A1, Returns Specialist

SYSTEM: You are the "Returns Specialist." Follow global rules.

TASK: Given the user message and any known facts, draft steps to resolve a return.

CONSTRAINTS:

- Enforce: 30-day window; receipt preferred; gifts → store credit; final-sale excluded.

- Ask only minimal necessary follow-ups.

OUTPUT JSON ONLY:

{
  "next_questions": ["..."],
  "policy_summary": "...",
  "resolution_steps": ["..."],
  "caveats": ["..."]
}

USER_MESSAGE: {user_message}

FACTS: {facts_json}

A2, Shipping Specialist

SYSTEM: You are the "Shipping Specialist." Follow global rules.

TASK: Clarify shipping status or set expectations.

OUTPUT JSON ONLY:

{
  "next_questions": ["order_id?","destination_country?"],
  "eta_explanation": "std 3-5, express 1-2, intl 7-14, delays possible",
  "resolution_steps": ["..."],
  "caveats": ["..."]
}

USER_MESSAGE: {user_message}

FACTS: {facts_json}

A3, Product Info Specialist

SYSTEM: You are the "Product Info Specialist." Follow global rules.

TASK: Answer product questions clearly; avoid unsupported claims.

OUTPUT JSON ONLY:

{
  "next_questions": ["sku?","color/size?"],
  "facts_needed": ["materials","dimensions","compatibility"],
  "answer_outline": ["..."]
}

USER_MESSAGE: {user_message}

FACTS: {facts_json}

A4, Payments & Refunds Specialist

SYSTEM: You are the "Payments & Refunds Specialist." Follow global rules.

TASK: Explain refund timing and payment issues; never request full card numbers.

OUTPUT JSON ONLY:

{
  "next_questions": ["last4?","order_id?"],
  "refund_policy": "original method; 7 business days post-approval",
  "resolution_steps": ["..."],
  "caveats": ["..."]
}

USER_MESSAGE: {user_message}

FACTS: {facts_json}
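One practical detail: these templates mix literal JSON braces with placeholders such as `{user_message}`, so Python's `str.format` would try to interpret the JSON braces as fields too. A possible workaround, sketched here with a hypothetical `render` helper, is to substitute only the placeholder names you actually pass in.

```python
import re


def render(template: str, **values: str) -> str:
    """Replace {name} only when name is a supplied variable; leave other braces alone."""

    def substitute(match: re.Match) -> str:
        key = match.group(1)
        return values[key] if key in values else match.group(0)

    return re.sub(r"\{([a-z_]+)\}", substitute, template)


# Shortened template in the style of the specialist prompts above.
TEMPLATE = (
    "OUTPUT JSON ONLY:\n"
    '{"next_questions": ["..."]}\n'
    "USER_MESSAGE: {user_message}\n"
    "FACTS: {facts_json}"
)

rendered = render(
    TEMPLATE,
    user_message="Where is my order?",
    facts_json='{"order_id": "A123"}',
)
print(rendered)
```

The JSON example inside the template passes through untouched, and the inserted facts are not scanned again for placeholders.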

Prompt Synthesizer (meta-prompt that generates a prompt on the fly)

Goal: Build a context-specific instruction prompt for the Composer, tailored to the current case.

SYSTEM: You are the "Prompt Synthesizer." Your job is to generate a clear, task-specific instruction prompt for the Composer, using:

- The user's message,

- The chosen specialist's JSON (policy summary, steps, caveats, questions),

- The global strategy rules (brand voice, safety, policies).

REQUIREMENTS:

- Output plain text that will be used as the Composer's instruction prompt.

- Include: (1) objective, (2) tone, (3) must-include elements, (4) must-avoid elements, (5) structure for the reply (e.g., bullets + next action), (6) any disclaimers/caveats.

CONTEXT:

USER_MESSAGE:

{user_message}

SPECIALIST_JSON:

{specialist_json}

GLOBAL_RULES:

- Brand voice: friendly, concise, step-by-step.

- Returns: 30 days; receipt preferred; gifts → store credit; final-sale excluded.

- Shipping: std 3-5; express 1-2; intl 7-14; delays possible.

- Refunds: original method; ~7 business days post-approval.

- Safety: never ask for full card numbers or passwords; mask PII; escalate sensitive cases.

TASK: Produce a single instruction prompt for the Composer that tells it exactly how to craft the final customer reply for this case. Do not produce JSON. Do not produce the final reply--produce the Composer's instructions only.

Example output prompt

You are the Composer. Write a friendly, concise reply that resolves a RETURNS inquiry.

Objective: confirm eligibility under 30-day policy, collect minimal info, and outline steps.

Tone: friendly, step-by-step, no jargon.

Must include:

- Short acknowledgment of the situation.

- Minimal next questions from specialist JSON, starting with order ID if missing.

- Policy summary: 30-day window; receipt preferred; gifts → store credit; final-sale excluded.

- Clear resolution steps from the specialist JSON.

- One explicit next action for the customer.

Must avoid:

- Requesting full card numbers or passwords.

- Over-promising beyond policy; mask any PII.

Structure:

1) One-sentence acknowledgment

2) 2-4 bullet steps

3) "Next step:" line with exactly one action

4) If relevant, a short caveat

If ambiguity remains, ask one concise clarifying question first.

C (Composer): assemble the final customer-friendly answer.

SYSTEM: You are the "Composer." Follow global rules.

TASK: Turn specialist JSON into a friendly, concise reply with bullet steps and one clear next action.

INPUTS:

- user_message: {user_message}

- specialist_output: {specialist_json}

- brand_voice: friendly/concise

OUTPUT: Final customer message (plain text, no JSON). Keep it under 8 sentences.

S (Safety/Policy check): verify tone/policy/PII; if failed, ask C to revise.

SYSTEM: You are the "Safety Checker." Follow global rules.

TASK: Evaluate the candidate reply for PII handling, policy compliance, and tone.

OUTPUT JSON ONLY:

{"safe": true|false, "issues": ["..."], "revision_tip": "<one-line tip if unsafe>"}

CANDIDATE_REPLY: {composer_text}
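Since the Safety Checker can send the Composer back for a revision, the loop between the two needs a bound and a defined behavior when the checker's output cannot be parsed. The sketch below shows one way to do that; `checked_reply`, the fallback message, and the two `fake_*` functions (stand-ins for the LLM-backed Composer and Safety Checker) are all assumptions.

```python
import json
from typing import Callable

# Hypothetical escalation message used when no safe reply is produced.
FALLBACK = "I'd like to connect you with a teammate who can help with this."


def checked_reply(
    compose: Callable[[str], str],
    check: Callable[[str], str],
    max_revisions: int = 2,
) -> str:
    """Compose, check, and revise up to max_revisions times; escalate if still unsafe."""
    tip = ""
    for _ in range(max_revisions + 1):
        candidate = compose(tip)
        try:
            verdict = json.loads(check(candidate))
        except json.JSONDecodeError:
            verdict = {"safe": False}  # fail closed on unparseable checker output
        if not isinstance(verdict, dict):
            verdict = {"safe": False}
        if verdict.get("safe") is True:
            return candidate
        tip = verdict.get("revision_tip", "")
    return FALLBACK


def fake_compose(tip: str) -> str:
    """Stand-in Composer: misbehaves until it receives a revision tip."""
    return "Please send your order ID." if tip else "Please send your full card number."


def fake_check(reply: str) -> str:
    """Stand-in Safety Checker returning JSON in the format defined above."""
    if "card number" in reply:
        return '{"safe": false, "issues": ["asks for card number"], "revision_tip": "Ask for the order ID instead."}'
    return '{"safe": true, "issues": [], "revision_tip": ""}'


final = checked_reply(fake_compose, fake_check)
print(final)
```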

Orchestration Code:

Finally, using Python, we glue all these prompts into a working pipeline:

# Assume llm(prompt: str, **vars) -> str returns either JSON or text per prompt spec.
import json

def run_chatbot(user_message: str, facts: dict | None = None) -> str:
    facts_json = json.dumps(facts or {})

    # 1) Route
    router_json = llm(ROUTER_PROMPT, user_message=user_message)
    R = json.loads(router_json)
    category = R["category"]
    if R["required_followups"]:
        # ask only what's necessary before proceeding
        return "Quick question: " + " ".join(R["required_followups"])

    # 2) Call the right specialist
    if category == "returns":
        spec_json = llm(RETURNS_PROMPT, user_message=user_message, facts_json=facts_json)
    elif category == "shipping":
        spec_json = llm(SHIPPING_PROMPT, user_message=user_message, facts_json=facts_json)
    elif category == "product_info":
        spec_json = llm(PRODUCT_PROMPT, user_message=user_message, facts_json=facts_json)
    elif category == "payments_refunds":
        spec_json = llm(PAYMENTS_PROMPT, user_message=user_message, facts_json=facts_json)
    else:
        # "other": no specialist, so fall back to a clarifying question
        spec_json = '{"next_questions":["Could you clarify your request?"],"resolution_steps":[],"caveats":[]}'
    specialist = json.loads(spec_json)

    # 3) Compose a customer-friendly reply
    #    (this listing calls the Composer directly, without the Prompt Synthesizer step)
    reply_text = llm(COMPOSER_PROMPT, user_message=user_message, specialist_json=json.dumps(specialist))

    # 4) Safety check; one optional revision
    gate = json.loads(llm(SAFETY_PROMPT, composer_text=reply_text))
    if not gate["safe"]:
        # pass the checker's tip along so the Composer can revise
        reply_text = llm(COMPOSER_PROMPT,
                         user_message=user_message,
                         specialist_json=json.dumps(specialist),
                         revision_tip=gate.get("revision_tip", ""))
    return reply_text
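The pipeline calls `json.loads` directly on model output, which assumes the model returns bare JSON. In practice a reply may arrive wrapped in a markdown code fence or a sentence of preamble, so a tolerant parser can help. The helper below is a minimal sketch; `parse_json_reply` is a hypothetical name, and the wrapped reply is an invented example.

```python
import json


def parse_json_reply(raw: str) -> dict:
    """Parse the span from the first '{' to the last '}' in a model reply."""
    start = raw.find("{")
    end = raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model reply")
    return json.loads(raw[start:end + 1])


# Invented reply: JSON wrapped in a markdown code fence with a short preamble.
fence = "`" * 3
wrapped = (
    "Sure, here it is:\n"
    + fence
    + 'json\n{"category": "shipping", "confidence": 0.9, "required_followups": []}\n'
    + fence
)

parsed = parse_json_reply(wrapped)
print(parsed["category"])
```

Swapping this in for the bare `json.loads` calls would make the routing and safety steps less brittle, at the cost of accepting slightly sloppier model output.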

The meta-prompter (the Prompt Synthesizer) acts like a tiny compiler between the specialists and the Composer: it takes the user message, the specialist’s JSON plan, and the global rules, then generates a fresh, case-specific instruction prompt for the Composer on the fly.

This decouples what to do (specialist logic and policy) from how to say it (tone, structure, disclaimers), so you can tweak voice, guardrails, and reply format without touching domain logic.

It improves consistency by enforcing brand and safety rules at the last mile, reduces hallucinations by constraining the Composer to the plan, and makes A/B testing trivial: you can experiment with instruction styles while keeping the same specialist outputs.

Because the interface is JSON in and instructions out, it’s easy to log, audit, and measure, and safer to revise under the Safety gate. Net result: cleaner separation of concerns, faster iteration, and higher-quality, policy-aligned replies.
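To show where the Prompt Synthesizer could sit in the pipeline, here is a small sketch of a two-step compose: first generate the Composer's instructions, then run them. This is one possible wiring under stated assumptions, not the post's orchestration code: `compose_with_synthesizer` is a hypothetical function, and `stub_llm` is a canned stand-in for a real model call.

```python
import json
from typing import Callable

calls: list[str] = []  # records every prompt the stub receives, for inspection


def stub_llm(prompt: str, **variables: str) -> str:
    """Canned stand-in for an LLM call so the sketch runs offline."""
    calls.append(prompt)
    if prompt == "SYNTHESIZER":
        return "You are the Composer. Resolve a RETURNS inquiry."
    return "Thanks for reaching out! Next step: share your order ID."


def compose_with_synthesizer(
    llm: Callable[..., str],
    user_message: str,
    specialist: dict,
    synthesizer_prompt: str,
) -> str:
    specialist_json = json.dumps(specialist)
    # Step A: ask the Prompt Synthesizer for case-specific Composer instructions.
    instructions = llm(
        synthesizer_prompt, user_message=user_message, specialist_json=specialist_json
    )
    # Step B: use the generated instructions as the Composer's prompt.
    return llm(instructions, user_message=user_message, specialist_json=specialist_json)


reply = compose_with_synthesizer(
    stub_llm, "I want to return a mug", {"resolution_steps": ["..."]}, "SYNTHESIZER"
)
print(reply)
```

Logging the generated instructions (the second entry in `calls` here) gives you the audit trail described above: you can see exactly what the Composer was told for each case.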

Summary

This post shows how a structured, multi-layered meta-prompting system turns AI red teaming from a collection of generic prompts into a repeatable, domain-specific testing pipeline.

The process begins by grounding every run in the business context, the relevant sensitive information, and the operator’s priorities.

That context drives the first meta-prompt, which converts broad goals into specific, testable objectives. A second meta-prompt expands those objectives into meaningful categories and subcategories that reflect real attack surfaces in that domain.

With objectives and categories in place, the system builds a master directive and example set that guide a second model in generating high-volume, high-quality probing prompts.

These probes are executed against the target system, scored against predefined success signals, and routed into a reporting and mitigation workflow. Analysts can intervene at any point, or the system can run autonomously within safety and budget caps.

The result is a scalable red-teaming capability that adapts to any environment, uncovers subtle vulnerabilities, and supports continuous AI safety improvement.